AI Agents and Social Determinants of Health

AI agents are playing an increasing role in addressing Social Determinants of Health (SDoH), especially in urban planning, public health, and social support organizations, where they monitor data, reason through problems, and act across complex systems.

An AI agent is a software architecture pattern that is fundamentally different from a traditional application because:

- It decides what to do next, not just how to perform a fixed set of operations.

- It uses AI to orchestrate tools, services, and workflows.

- It is goal-driven rather than rule-driven.

The Evolution of Agent Architectures

From the 1990s through the 2000s, AI commonly relied on rule-based logic, symbolic reasoning, and simple algorithms. This approach, often called “Good Old-Fashioned AI” (GOFAI), did not involve machine learning. These technologies were used to develop Agent-Based Models (ABMs) to support urban planning and public health. An ABM is a computational model that simulates the actions and interactions of multiple autonomous agents to understand emergent system-level behavior. The ABM itself would not be considered an agent; rather, it serves as a research model for studies such as economic simulations, traffic modeling, and epidemiological analysis.

An example is “A Spatial Agent-Based Model for the Simulation of Adults’ Daily Walking Within a City,” an ABM developed by researchers at Columbia University. The model simulates how adults make walking decisions based on urban design, land use, safety, and social networks.

From the 2010s forward, machine learning models were increasingly used to develop deliberative agents. Deliberative agents rely on decision logic that incorporates ML model predictions and then follow predetermined rules or workflows to act on those predictions.

As ML techniques matured, deliberative agents emerged to assist clinicians with diagnosis, risk assessment, and treatment recommendations. These systems increasingly incorporate SDoH variables such as income, transportation access, housing stability, and food security.

In the 2020s, agents began using large language models (LLMs) as their reasoning core. LLM-based agents can interact conversationally, access external tools, and maintain context across interactions. This moves beyond the fixed rules and workflows of deliberative agents toward more flexible problem-solving. LLM-based agents have significant potential for SDoH improvements, but the field is still in its early stages.

Why LLM-based Agents Matter for SDoH

1. SDoH Relies on Messy, Multi-Source Data

Analysis of Social Determinants of Health often depends on integrating information from different agencies and domains. Housing records, zoning maps, transportation schedules, environmental sensors, clinical surveys, and socioeconomic indicators rarely share data formats. Agents can fetch data from multiple sources and reconcile schema differences across agencies and datasets without requiring manual intervention.
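As a minimal sketch of what reconciling schema differences can mean in practice, the Python below maps two differently named record layouts onto one shared schema and merges them by tract and year. The source names, field names, and values are all hypothetical and chosen only for illustration; real agency schemas will differ, and an agent would typically infer such a mapping rather than have it hard-coded.

```python
# Hypothetical mapping from each source's field names to a shared schema.
FIELD_MAP = {
    "housing": {"tract_id": "tract", "yr": "year", "violations": "housing_violations"},
    "transit": {"census_tract": "tract", "year": "year", "stops_per_sq_km": "transit_stop_density"},
}


def normalize(record, source):
    """Rename source-specific fields to the shared schema, dropping unmapped ones."""
    mapping = FIELD_MAP[source]
    return {mapping[k]: v for k, v in record.items() if k in mapping}


def merge_by_key(*record_lists):
    """Combine normalized records that share the same (tract, year) key."""
    merged = {}
    for records in record_lists:
        for rec in records:
            # Coerce key types so "2020" and 2020 refer to the same year.
            key = (str(rec["tract"]), int(rec["year"]))
            row = merged.setdefault(key, {"tract": key[0], "year": key[1]})
            row.update({k: v for k, v in rec.items() if k not in ("tract", "year")})
    return merged
```

Coercing the key types before merging is the design choice that matters here: mismatched types for the same identifier are one of the most common reasons joins across agencies silently drop rows.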

2. Analysis of SDoH Is Complex and Context-Rich

SDoH analysis can require a sequence of interdependent steps: identifying data sources, extracting and preparing data, combining spatial and statistical methods, and presenting the results. An LLM-based agent can orchestrate these steps. When given a goal such as “Identify neighborhoods at high risk from extreme heat,” an agent can reason through each step: locate the relevant data, integrate it, run geospatial analyses, and produce maps and summaries suitable for planners.

3. Proactive Solutions for SDoH

Public health and urban agencies can benefit from detecting and analyzing changing conditions. Traditional applications may not provide timely analysis of events such as air-quality spikes, contaminated water alerts, severe heat events, EMS overload, or increases in housing violations.

Agents can monitor live data streams, APIs, and sensor networks. They can detect anomalies as they happen, summarize risks, and automatically notify planners or public-health officials. They can also trigger workflows, such as generating maps or preparing briefings for response teams.
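One simple way to detect anomalies in a stream is a rolling z-score, sketched below. This is an illustrative assumption rather than a method prescribed here: the class name, window size, threshold, and `notify` callback are all hypothetical, and a production monitor would likely need seasonality handling and domain-specific thresholds.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag readings that deviate strongly from a recent window of values."""

    def __init__(self, window=24, threshold=3.0, min_history=5):
        self.readings = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, value):
        """Return True if value is an outlier relative to the current window."""
        is_anomaly = False
        if len(self.readings) >= self.min_history:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            # Skip the check when the window is constant (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.readings.append(value)
        return is_anomaly


def monitor(stream, detector, notify):
    """Pass each (timestamp, value) reading to the detector and notify on anomalies."""
    for timestamp, value in stream:
        if detector.observe(value):
            notify(f"{timestamp}: reading {value} is outside the recent range")
```

In an agent setting, `notify` could hand the alert to the LLM so it can summarize the risk or trigger a follow-up workflow such as generating a map.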

ReAct Agents

Most LLM-based agents today use ReAct or ReAct-like architectures. ReAct (Reasoning + Acting) is an agent architecture pattern that combines an LLM’s reasoning abilities with external tools, such as APIs, databases, or functions. ReAct agents interleave thought steps from an LLM with actions through tools, observe the results, and update their reasoning.

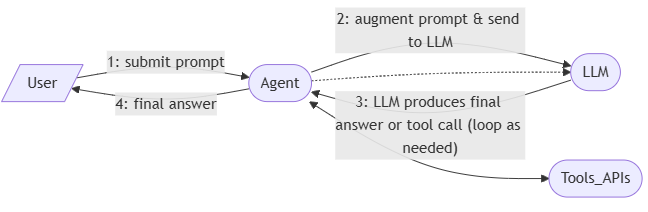

ReAct Agent Flow

The LLM makes the decisions and orchestrates the steps, while the agent server handles calling tools and returning their results to the LLM.

- User submits prompt: The user provides a task or question to the agent.

- Agent augments prompt and sends to LLM: The agent adds instructions, context, and tool descriptions, then asks the LLM to either produce a final answer or specify a tool call to gather additional information.

- LLM produces final answer or tool call: If a tool call is needed, the agent formats input, invokes the tool, and feeds the result back to the LLM. This loop continues until the LLM produces a final answer.

- Agent returns final answer: The agent delivers the response back to the user.
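The flow above can be sketched as a short loop. This is a minimal illustration under stated assumptions, not a definitive implementation: the JSON reply format, the `run_agent` function, the scripted `fake_llm` stand-in, and the `count_clinics` tool with its synthetic return value are all hypothetical. A real agent would call an actual LLM API and handle malformed replies.

```python
import json


def run_agent(user_prompt, llm, tools, max_steps=5):
    """Loop until the LLM returns a final answer or the step budget runs out."""
    messages = [
        {"role": "system", "content": "Reply with JSON: either "
            '{"final_answer": ...} or {"tool": ..., "args": {...}}. '
            f"Available tools: {sorted(tools)}"},
        {"role": "user", "content": user_prompt},
    ]
    for _ in range(max_steps):
        reply = json.loads(llm(messages))
        if "final_answer" in reply:
            return reply["final_answer"]
        # Act: invoke the requested tool, then feed the observation back.
        result = tools[reply["tool"]](**reply["args"])
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": json.dumps(result)})
    raise RuntimeError("No final answer within the step budget")


def fake_llm(messages):
    """Scripted stand-in for an LLM: request one tool call, then answer."""
    if messages[-1]["role"] == "tool":
        observation = json.loads(messages[-1]["content"])
        return json.dumps(
            {"final_answer": f"The county has {observation['count']} clinics."}
        )
    return json.dumps({"tool": "count_clinics", "args": {"county": "Franklin"}})


def count_clinics(county):
    """Hypothetical tool returning a synthetic value for illustration."""
    return {"county": county, "count": 3}
```

The `max_steps` budget is a deliberate safeguard: without it, an LLM that keeps requesting tools would loop indefinitely.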

The following example shows an agent with access to several tools.

1. User Prompt

“Please generate a county-level map showing increases in air particulates (PM2.5) over the past five years.”

2. Agent augments prompt and sends to LLM

The agent adds:

- System instructions: “You are an analytical assistant. Follow the pattern: reason, act, and observe. When needed, call tools using the specified schema. Use tool results to improve your response until you can provide a final answer.”

- User prompt

- Tool descriptions (e.g., get_air_quality_data, draw_map)

Then the agent asks the LLM:

“Given the user’s prompt, produce a final answer or specify a tool call to gather additional information.”

3. First LLM response (Reason step)

The LLM determines that PM2.5 county trends require data and outputs a tool call:

Call get_air_quality_data with {year_range: 2019–2024}.

4. Agent action (Act + Observe)

The agent formats input, calls the tool, and receives PM2.5 values over five years for all counties. The results are added to the context, and the LLM is called again.

5. Second LLM response (Reason)

The LLM analyzes the data and previous context and determines that a map visualization is needed:

Call draw_map with {county_geojson, values: pm25_change}.

6. Agent action (Act + Observe)

The agent invokes the mapping tool and receives the generated map. The map output is added to the context, and the LLM is called again.

7. Final LLM response (Reason → Final Answer)

The LLM produces the concluding explanation:

“Here is the county map showing PM2.5 increases over the past five years…”
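The walkthrough depends on two pieces of agent-side plumbing: describing tools to the LLM and checking a proposed tool call before running it. The sketch below illustrates both. The tool names come from the example, but the registry layout, parameter types, and helper functions are assumptions made for illustration; agent frameworks typically use richer schemas such as JSON Schema.

```python
# Hypothetical registry: parameter names follow the example, types are assumed.
TOOLS = {
    "get_air_quality_data": {
        "description": "Return PM2.5 values by county for a range of years.",
        "parameters": {"year_range": list},
    },
    "draw_map": {
        "description": "Render a county-level map from a mapping of values.",
        "parameters": {"county_geojson": dict, "values": dict},
    },
}


def describe_tools(tools):
    """Render tool descriptions as text the agent can add to the prompt."""
    lines = []
    for name, spec in tools.items():
        params = ", ".join(
            f"{param}: {param_type.__name__}"
            for param, param_type in spec["parameters"].items()
        )
        lines.append(f"- {name}({params}): {spec['description']}")
    return "\n".join(lines)


def validate_call(tools, name, args):
    """Check a tool call proposed by the LLM before the agent executes it."""
    if name not in tools:
        raise ValueError(f"Unknown tool: {name}")
    expected = tools[name]["parameters"]
    missing = sorted(set(expected) - set(args))
    unexpected = sorted(set(args) - set(expected))
    if missing or unexpected:
        raise ValueError(f"{name}: missing={missing}, unexpected={unexpected}")
    for param, expected_type in expected.items():
        if not isinstance(args[param], expected_type):
            raise TypeError(f"{name}.{param} must be {expected_type.__name__}")
    return True
```

Validating before execution lets the agent return a clear error message to the LLM as an observation, so the LLM can repair the call on its next step.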

Reasoning

True reasoning involves the ability to abstract, infer, and generalize. LLMs are trained on next-token prediction, which means they learn to predict what word comes next based on patterns in their training data. So are they performing “actual reasoning” or just pattern matching?

Some argue that LLMs rely on memorized patterns and cite examples where LLMs fail when challenged with novel concepts. Others argue that something resembling reasoning emerges from pattern matching combined with actions that provide feedback. They cite examples of LLMs solving many reasoning benchmarks with high accuracy.

Whatever the case, ReAct agents at least do something that is functionally similar to reasoning. This can be seen in the following behaviors:

- Self-Correction: Through the iterative use of tools, ReAct agents become “grounded in reality” (at least the reality provided by the tools). The external feedback loop forces correction in a way that pure pattern matching does not.

- Multi-Step Problem Solving: LLMs can fail in multi-step problem solving because early errors can compound, and the LLM may need to consider a large context. With tools, each step receives verification, and the entire solution path does not need to be derived from context. The LLM only needs to reason about what to do next.

- Structured Approach: The ReAct system instructions impose a structure:

  - Thought – must articulate reasoning

  - Action – must commit to completion or a specific tool

  - Observation – objective feedback

This externalized reasoning process may help the LLM stay on a path toward a good final response.
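To show how an agent might enforce the Thought and Action structure, the sketch below parses a single LLM step written in a plain-text layout of the form `Thought: ...` followed by either `Action: tool[input]` or `Final Answer: ...`. That layout is one common convention and is an assumption here, as are the function and pattern names; many agents use structured JSON tool calls instead.

```python
import re

# A Thought must be followed by either an Action or a Final Answer.
STEP_PATTERN = re.compile(
    r"Thought:\s*(?P<thought>.+?)\s*"
    r"(?:Action:\s*(?P<action>\w+)\[(?P<action_input>.*?)\]"
    r"|Final Answer:\s*(?P<final>.+))\s*$",
    re.DOTALL,
)


def parse_step(text):
    """Split one LLM step into its thought and its action or final answer."""
    match = STEP_PATTERN.search(text.strip())
    if match is None:
        raise ValueError(
            "Output must contain a Thought followed by an Action or Final Answer"
        )
    if match["final"] is not None:
        return {"thought": match["thought"], "final_answer": match["final"].strip()}
    return {
        "thought": match["thought"],
        "action": match["action"],
        "action_input": match["action_input"],
    }
```

Rejecting output that lacks a Thought or a committed Action is what makes the structure binding: the agent can send the error back to the LLM and ask it to try again in the required format.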

Agent Example: SDoH Data Analysis and Exploration

The SocialHealthAI/Data-Analytics-Assistant is a ReAct agent that queries data from a database or OpenStreetMap. The assistant can perform statistical analyses, such as correlations, and generate visualizations using charts and maps.

Example Prompt

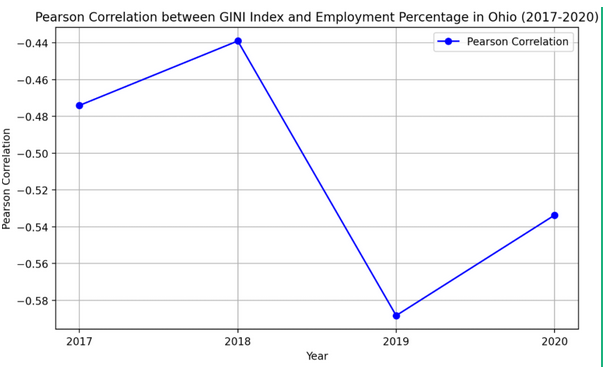

Show a line chart of the correlation between the GINI Index and the percentage of the civilian labor force that is employed for the state of Ohio in the years 2017 to 2020.

Final Answer

The line chart illustrates the Pearson correlation between the GINI Index and the percentage of the civilian labor force that is employed in Ohio from 2017 to 2020. The correlation values are negative, indicating an inverse relationship between the two variables over the given years.

Example Correlation Chart

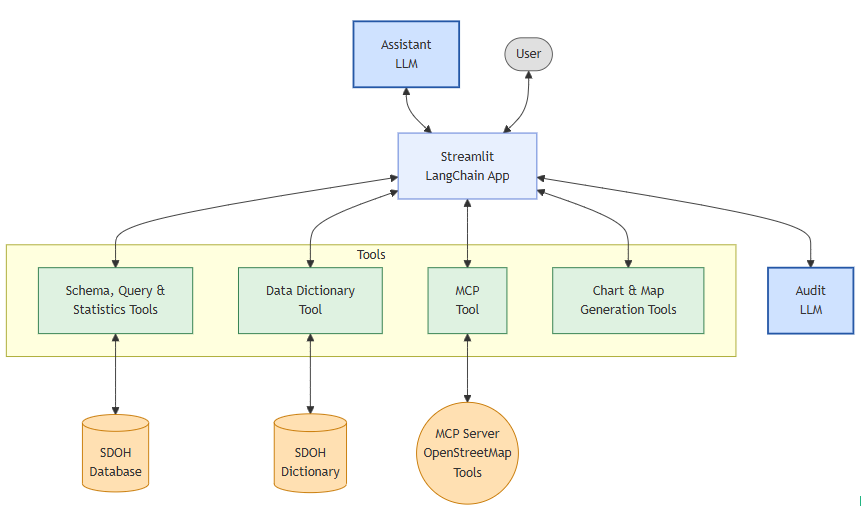

The Data Analytics Assistant architecture is shown below. The Assistant is implemented as a Streamlit LangChain application. The application manages the flow between the user, an LLM, and tools. When the final response is produced, the application provides an option to audit the process using a different LLM.

Analytic Assistant Tools

The chain of reasoning in the example prompt uses the following tools:

1. Data Dictionary Tool – Identify columns with descriptions similar to “GINI Index” and “Percentage of civilian labor force that is employed.”

2. Schema Tools – Verify that the identified columns exist in the database.

3. Statistics Tools – Check whether the database supports correlation functions.

4. Query Tools – Validate a SQL query using these columns and the correlation function with data from Ohio for the years 2017 to 2020.

5. Query Tools – Execute the query.

6. Chart Generation Tool – Generate the code to render the chart and provide a description of the chart.

Step 6 produces the final answer. The agent presents the chart description and runs the generated code to render the visualization.
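For readers who want to see the statistic behind the example, the sketch below computes a Pearson correlation separately for each year. It is not the assistant's code, which according to the steps above relies on the database's correlation function. The column names `gini_index` and `pct_employed` and the function names are hypothetical.

```python
from collections import defaultdict
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("Need two sequences of equal length with at least 2 values")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("Correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


def correlation_by_year(rows, x_col, y_col):
    """Group rows by their 'year' field and correlate x_col with y_col per year."""
    groups = defaultdict(lambda: ([], []))
    for row in rows:
        xs, ys = groups[row["year"]]
        xs.append(row[x_col])
        ys.append(row[y_col])
    return {year: pearson(xs, ys) for year, (xs, ys) in sorted(groups.items())}
```

Each row would represent one geographic unit, such as a county, in one year, so the result is one coefficient per year. Plotting those coefficients against the year yields a line chart like the one in the example.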

Conclusion

LLM-based agents have the potential to change how Social Determinants of Health (SDoH) are analyzed and how insights can be synthesized. Tools like the open-source SocialHealthAI/Data-Analytics-Assistant provide a practical starting point for experimentation with ReAct agents.

While this article focuses on how LLM-based agents work and why they matter for SDoH analysis, implementing an agent system involves additional considerations. Organizations will need to make decisions about technical infrastructure, scalability requirements, costs, and security protocols.

To find other SDoH-focused agent solutions, explore open-source repositories, research literature, and AI-for-good initiatives. By leveraging these resources, you can more effectively address the social factors that shape health outcomes.
